Links

"What’s the Matter with Abundance?" Malcolm Harris' worthwhile critique of Ezra Klein and Derek Thompson's "Abundance"

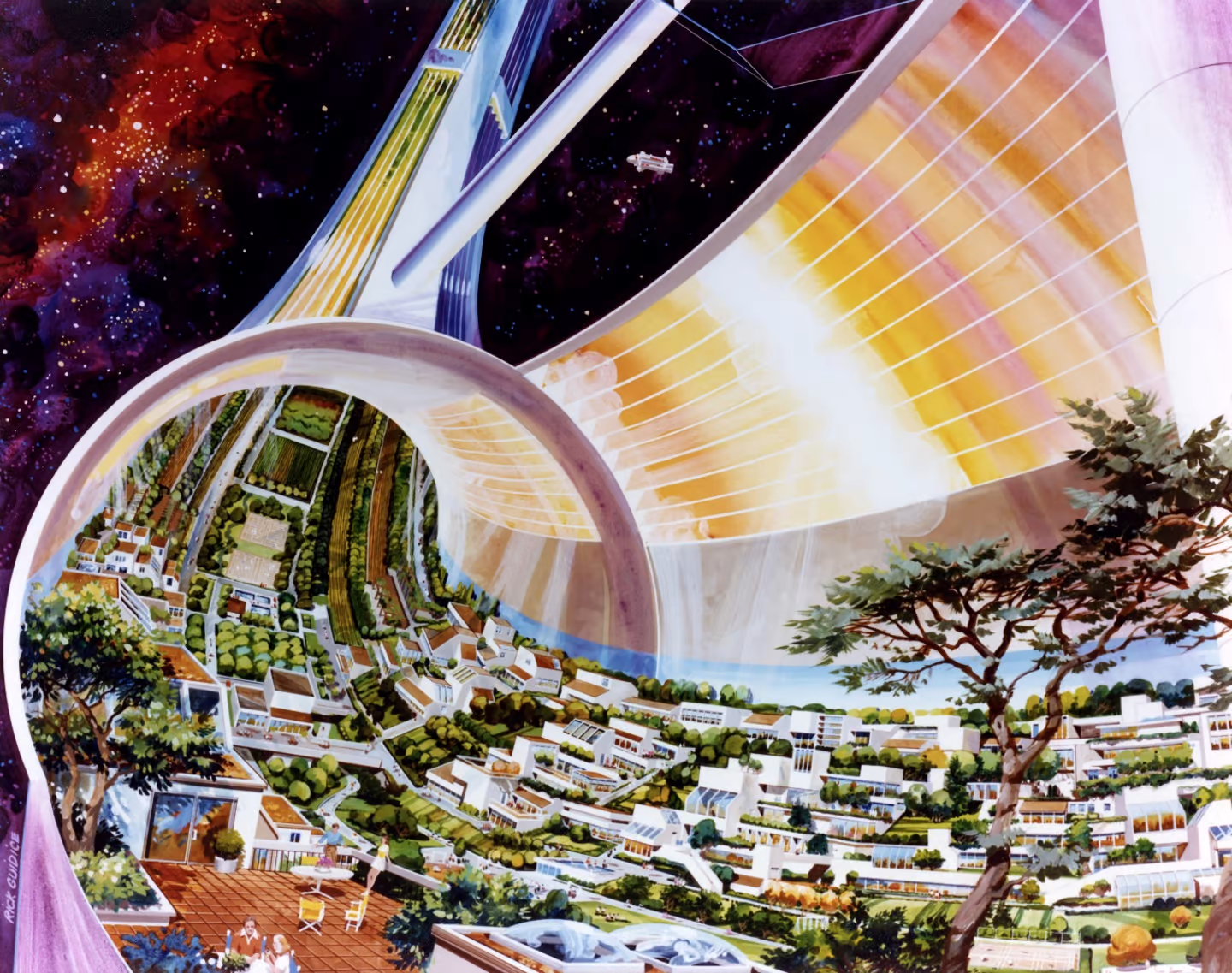

Cutaway view of a toroidal colony, by Rick Guidice. Source: NASA Ames Research Center

Abundance is the prefab, catch-all alternative to these forms of scarcity-thinking on “both the socialist left and the populist authoritarian right.” Large increases in material output, we are assured, can save liberalism from the civilizational choice between socialism and barbarism. I disagree; refusing to be forthright about society’s structural antagonisms opens the door to demagogues who peddle false conflicts that still ring truer than the liberals’ false peace.

The Abundance authors are hesitant to enumerate the tradeoffs their agenda will require. Prompted by the work of degrowth advocate Jason Hickel, they consider whether we should shut off or scale down destructive sectors of production, such as military investment, meat and dairy production, advertising, and fast fashion. “There is some appeal to this,” they write. “All of us can identify some aspect of the global production system that seems wasteful, unnecessary, or harmful. The problem is that few of us identify the same aspects of the global production system.” Hamburgers, they inform us, are popular in America. As is advertising, I suppose, insofar as we see a lot of it. But why can’t decent liberals like Klein and Thompson bring themselves to interrogate America’s trillion-dollar defense budget? It can’t just be an issue of popularity; after all, there’s nothing Americans like better than living in single-family homes, and the authors aren’t too afraid to call for the return of boarding houses. This country’s bombs don’t merely “seem” wasteful, unnecessary, or harmful. And disarmament is not scarcity—on the contrary.

The two-lane road to hell is paved with good intentions: why an all-or-none approach to generative AI, integrity, and assessment is insupportable

Unlimited genAI use (Lane 2/All), if applied to students in their early stages of higher education, is akin to handing a teenager the keys to a high-powered sports car and saying ‘have fun’. This approach ignores the fundamental need to educate students to build skills incrementally within sensible limits.

A helpful, considered article by Guy Curtis on the limitations of the two-lane heuristic for AI use and assessment in higher education.

The $1m cactus heist that led to a smuggler's downfall

Reaching out of nooks in the cracked crust along the desert’s coast, there lie thousands of Copiapoa cacti. A cactus group made up of more than 30 species, Copiapoa are found only in Chile. They grow a mere centimetre each year in scorching, breathtaking desert conditions by absorbing the local evening fog, known as camanchaca.

These rare, aubergine-shaped succulents exemplify life’s ability to adapt to extremes – one of the traits that has made them highly sought after by plant collectors.

They’ve also just been at the heart of a landmark trial over an international cactus heist that might revolutionise how biodiversity crimes are dealt with the world over.

Operation Atacama: The $1m cactus heist that led to a smuggler’s downfall - BBC

Best sentence I’ve read in a while, courtesy of A S Hamrah:

British movies these days—from good ones like The Old Oak to OK ones like Bird to wretched ones like Saltburn—present British people as ruthlessly mean to each other, petty, conniving, classist, vulgar shits who add “innit?” at the end of sentences that aren’t questions but insults.

Lists colonise the mind and impoverish the imagination.

Elena Gorfinkel’s clarion “Against Lists” remains critically relevant.

Trump administration demands Columbia University:

- put an outside Chair in charge of Middle Eastern, South Asian, and African Studies

- adopt a new definition of antisemitism

- reform admissions

- ban masks

- overhaul student discipline

- comply immediately or risk losing funding.

U.S. researchers consider

This is a generous gesture but PACA is the heartland of the French far right, and the academy is a perpetual whipping boy for politicians. Anyone who moved would face another set of difficulties.

General practice use of A.I. scribes is already much more widespread than people realise

Legal expert warns patients' medical data at risk as GPs adopt AI scribes - ABC

Excellent points in this article. I’ve directly observed some cavalier attitudes about the use of A.I. scribes, and consent processes aren’t what they’re cracked up to be. I’ve also seen this issue dismissed as “table stakes” in a pollyannaish rush towards imaginary uses for patient recall and enhanced practice profitability.

Health data is amongst the most sensitive data there is. Data governance and data security are major issues with these apps. We shouldn’t be forced into using scribes based on the decisions of practice owners or individual GPs.

Is a calculator a learning tool?

I’m not being rhetorical. The AI-and-calculator metaphor is everywhere in educational assessment discussions at the moment but I’m not convinced it’s a relevant or helpful one.

A better metaphor is probably plastics: a transformative technology that disrupts whole sectors and enables new ones, while also creating problems we don’t envisage or fully appreciate, and whose impacts will be around for centuries.

“The result of Rehberger’s attack is the permanent planting of long-term memories that will be present in all future sessions, opening the potential for the chatbot to act on false information or instructions in perpetuity.” - Ars Technica

A wonderful, detailed article about Fugazi’s music and legacy. They were the first band I ever saw live (someone dug up the poster but sadly not the recording) and they remain unparalleled. I’m looking forward to seeing the new We Are Fugazi from Washington D.C. doco.

😳😳😳 Bonkers

The Labs also lead a comprehensive program in nuclear security, focused on reducing the risk of nuclear war and securing nuclear materials and weapons worldwide. This use case is highly consequential, and we believe it is critical for OpenAI to support it as part of our commitment to national security. Our partnership will support this work, with careful and selective review of use cases and consultations on AI safety from OpenAI researchers with security clearances.

OpenAI - Strengthening America’s AI leadership with the U.S. National Laboratories

Take care of yourself, stay cool, and don’t be a numbnut. Check out HeatWatch for evidence-based ways to cool down.

<img src="https://cdn.uploads.micro.blog/663/2025/screenshot-2025-01-28-at-3.38.55pm.png" width="595" height="600" alt="Screenshot of HeatWatch web page, showing my heat health risk is currently extreme. It suggests 'drink water, wear light clothing, rest in the shade, wet your skin, ice in wet towel'.">

Menthol cigarette ban overturned by new U.S. Administration

For those who are wondering where menthol bans are coming from, they’ve been recommended by WHO in line with the Framework Convention on Tobacco Control. I was hoping governments might tackle ma’assel as well - unlikely now.

Who’s Afraid of the Little Red Book

A really interesting and timely dive into Xiaohongshu/RedNote.

“Who’s Afraid of the Little Red Book” - The China Story by Wing Kuang

In the United States and Australia, Xiaohongshu is widely used by Chinese students studying at universities and young first-generation migrants, who find it useful for locating Chinese restaurants, Asian grocery stores and Chinese-speaking trade services as well as researching immigration policies and sharing their immigration experiences.

In Australia, Xiaohongshu attracts almost 700,000 users, including Chinese Australian citizens and temporary residents such as international students. In 2021, I interviewed three Australians who are on Xiaohongshu. Sebastian, who first came across Xiaohongshu through his Chinese partner, treated the platform like a ‘Chinese Instagram’ where he posted his daily outfits in exchange for hundreds of likes – often much more than on his Instagram where he shares the same content.